AI Video Generation

Evidence cutoff: Verified through December 2025, drawing primarily from independent benchmarks (e.g., Artificial Analysis Video Arena, VBench), technical reports from model developers (e.g., OpenAI system cards, Google DeepMind publications), and peer-reviewed or audited analyses. Excluded: Vendor marketing claims, unverified forecasts, non-replicable anecdotes, and developments occurring after 2025 (presented solely as conditional scenarios when applicable).

Source classes included independent benchmarking datasets, model architecture descriptions from arXiv preprints, and administrative disclosures (e.g., API documentation, safety reports). Secondary syntheses are separated from primary evidence; weak or indirect evidence (e.g., self-reported user evaluations) is flagged explicitly.

Uncertainty acknowledgment: known biases include self-selection in benchmark participation (leading models opt in), survivorship bias (failed deployments are rarely publicized), and publication bias (positive results are overemphasized). A lack of evidence on long-term enterprise outcomes is not evidence that those outcomes will not occur. Decisions are made under partial observability and asymmetric risk (e.g., reputational harm from failures outweighs gains from successes).

Image integration (1/3):

Barriers to Industry Adoption of AI Video Generation Tools: A …

This diagram from a 2024 industry analysis illustrates barriers to AI video tool adoption (measured via qualitative surveys of creative professionals; unknown: quantitative deployment rates or ROI). Analytically, it separates measured technical limitations (e.g., consistency issues) from unmeasured organizational frictions (e.g., workflow integration), highlighting why high-fidelity generation alone does not guarantee enterprise uptake.

Current Landscape (Q4 2025 Advancements)

(Observed outcome) By late 2025, closed-source models dominate fidelity benchmarks. Runway Gen-4.5 holds the top position on the Artificial Analysis Video Arena leaderboard (independent human preference evaluations; scope: text-to-video tasks; population: aggregated user votes; time horizon: December 2025). Google Veo 3.1 and OpenAI Sora 2 follow closely: Veo stands out for native audio integration (synchronized dialogue and effects), and Sora for physical accuracy (e.g., depicting failed actions with realistic motion instead of forcing success).
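
Arena-style leaderboards turn many pairwise human votes into a single ranking. The sketch below assumes an Elo-style update, a common choice for such arenas; the rating method, K-factor, and starting rating that Video Arena actually uses are not stated in this post, and the model names and votes are hypothetical.

```python
# Elo-style leaderboard from pairwise preference votes (hypothetical data).
# Assumption: Elo with K=32 and a 1000 starting rating; real arenas may use
# different parameters or a Bradley-Terry fit instead.

def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A is preferred over B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update(ratings: dict, winner: str, loser: str, k: float = 32.0) -> None:
    """Apply one vote in place; the winner gains exactly what the loser loses."""
    delta = k * (1.0 - expected_score(ratings[winner], ratings[loser]))
    ratings[winner] += delta
    ratings[loser] -= delta


def leaderboard(votes, start: float = 1000.0):
    """Replay (winner, loser) votes in order and return models sorted by rating."""
    ratings = {}
    for winner, loser in votes:
        ratings.setdefault(winner, start)
        ratings.setdefault(loser, start)
        update(ratings, winner, loser)
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical votes between three anonymized models.
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
    ("model_c", "model_b"),
    ("model_a", "model_b"),
]
for name, rating in leaderboard(votes):
    print(f"{name}: {rating:.1f}")
```

Sequential Elo updates depend on vote order, so rankings built from a handful of votes, as here, are unstable; a leaderboard position is best read alongside its vote count.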

(Expert interpretation) Open-source models are narrowing the gap but still lag in consistency and audio. According to Hugging Face evaluations (self-reported benchmarks; indirect evidence), the Wan2.2 series (a mixture-of-experts, or MoE, architecture for efficiency) and Mochi 1 (high-fidelity motion) are among the strongest open models. Acceleration frameworks such as TurboDiffusion (open-sourced in December 2025) enable near-real-time inference on optimized hardware, but quality trade-offs remain (observed: 100–200x speedups with minimal quality loss in lab tests; unknown: variability in real-world enterprise settings).
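
To make "near-real-time" concrete, the arithmetic below converts a speedup factor into generation latency and a real-time factor (seconds of generation per second of output video). The baseline latency and clip length are hypothetical placeholders, not TurboDiffusion measurements.

```python
# Converting a lab speedup into latency and real-time factor.
# Assumption: baseline_s and clip_s are hypothetical placeholders.

def accelerated_latency(baseline_s: float, speedup: float) -> float:
    """Wall-clock generation time after applying a speedup factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_s / speedup


def real_time_factor(generation_s: float, clip_s: float) -> float:
    """Seconds of generation per second of video; <= 1.0 is real time or faster."""
    return generation_s / clip_s


baseline_s = 600.0  # hypothetical: 10 minutes to generate one clip without acceleration
clip_s = 5.0        # hypothetical: a 5-second clip
for speedup in (100.0, 200.0):
    gen_s = accelerated_latency(baseline_s, speedup)
    print(f"{speedup:.0f}x: {gen_s:.1f} s to generate, RTF {real_time_factor(gen_s, clip_s):.2f}")
```

Under these assumptions, 100x lands just above real time (RTF 1.20) and 200x just below it (RTF 0.60). Real deployments add queueing, decoding, and I/O that the sketch ignores.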

(Contested finding) Evidence for "cinematic" quality claims is mixed: human raters prefer closed models for narrative coherence, but open models suffice for short-form tasks. VBench dimensions are themselves contested because scores are sensitive to prompt wording.
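
One way to see why prompt sensitivity undermines a benchmark gap: if a model's score varies across paraphrases of the same prompt by more than the gap between two models, the ranking depends on which paraphrase the benchmark used. The scores below are hypothetical, not VBench results, and pairing scores by paraphrase index is an assumption of the sketch.

```python
from statistics import mean, pstdev

# Hypothetical per-paraphrase scores on one benchmark dimension (not VBench data).
closed_scores = [0.82, 0.79, 0.85, 0.74]
open_scores = [0.80, 0.81, 0.78, 0.77]


def order_flips(scores_a, scores_b) -> bool:
    """True if model A wins on some paraphrases and loses on others."""
    if len(scores_a) != len(scores_b):
        raise ValueError("scores must be paired by paraphrase")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    return any(d > 0 for d in diffs) and any(d < 0 for d in diffs)


def gap_and_spread(scores_a, scores_b):
    """Mean gap between models vs. the larger within-model spread across paraphrases."""
    gap = abs(mean(scores_a) - mean(scores_b))
    spread = max(pstdev(scores_a), pstdev(scores_b))
    return gap, spread


gap, spread = gap_and_spread(closed_scores, open_scores)
print(f"order flips: {order_flips(closed_scores, open_scores)}")
print(f"mean gap {gap:.3f} vs. paraphrase spread {spread:.3f}")
```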

Image integration (2/3):

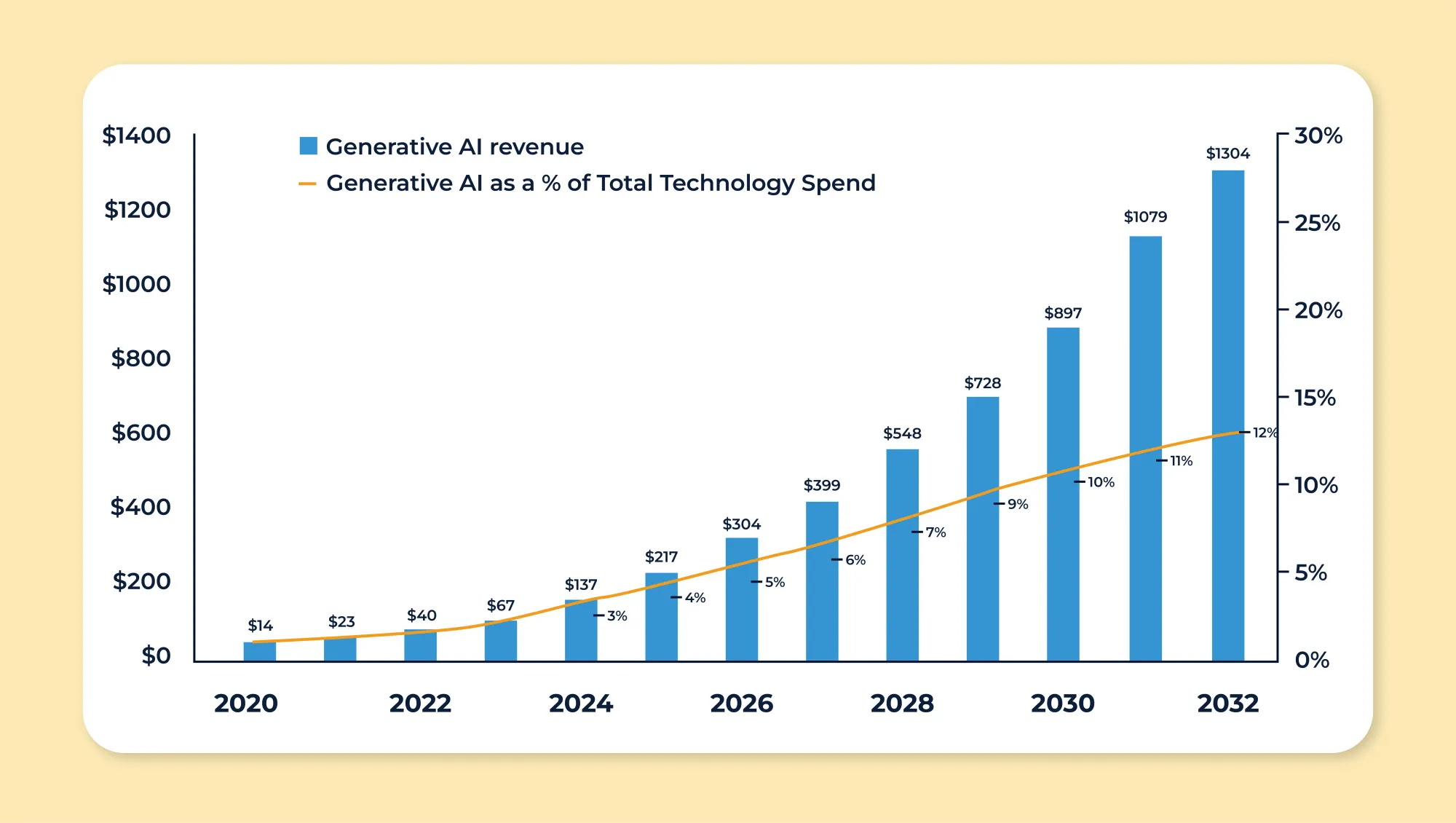

Generative AI in 2025: Exploration of All Gen-AI Models

This 2025 overview timeline of generative AI models tracks advances from 2024 previews (e.g., the initial Sora preview) to late-2025 releases (e.g., Veo 3.1, Ray3). Analytically, it separates measured progress in multimodal integration (audio and video) from unmeasured gaps in enterprise-ready controls (e.g., governance, scalability).

Trade-offs in Organizational Use

| Dimension | Closed-Source (e.g., Sora 2, Veo 3.1, Runway Gen-4.5, Kling 2.6, Luma Ray3) | Open-Source (e.g., Wan2.2, Mochi 1, Open-Sora 2.0) | Measured Outcomes | Unmeasured Outcomes |
|---|---|---|---|---|
| Fidelity & Consistency | High (top benchmarks; character/scene persistence) | Improving but variable (MoE helps efficiency) | Human preference scores (Video Arena) | Long-term narrative coherence in production pipelines |
| Control & Customization | API-level (prompt adherence, reference images) | Full weights (fine-tuning possible) | Prompt following (VBench) | Integration with proprietary data/workflows |
| Cost & Scalability | Subscription/API (per-second pricing) | Hardware-dependent (consumer GPUs viable for some) | Inference speed (TurboDiffusion lab tests) | Total ownership costs in regulated environments |
| Governance & Risk | Built-in watermarks, moderation (observed in deployments) | Community-dependent | Compliance disclosures | Liability in misuse cases |
| Audio Integration | Native (Veo 3.1, Sora 2, Kling 2.6) | Limited/post-hoc | Sync accuracy (blind tests) | Real-world dialogue naturalness |
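
The cost row contrasts per-second API pricing with hardware-dependent self-hosting. A rough break-even calculation shows how a fixed monthly overhead trades against a lower marginal cost per output second. Every price and throughput figure below is a hypothetical placeholder, and the model ignores staffing, storage, and compliance costs, which the table lists as unmeasured.

```python
# Break-even between per-second API pricing and self-hosted GPUs.
# Assumption: every number below is a hypothetical placeholder.

def api_cost(video_s: float, price_per_s: float) -> float:
    return video_s * price_per_s


def self_hosted_cost(video_s: float, gpu_hourly: float, gen_ratio: float, fixed_monthly: float) -> float:
    """gen_ratio = GPU-seconds needed per second of output video."""
    gpu_hours = video_s * gen_ratio / 3600.0
    return fixed_monthly + gpu_hours * gpu_hourly


def break_even_video_s(price_per_s: float, gpu_hourly: float, gen_ratio: float, fixed_monthly: float) -> float:
    """Monthly output seconds at which both options cost the same."""
    marginal = gpu_hourly * gen_ratio / 3600.0  # self-hosted cost per output second
    if marginal >= price_per_s:
        return float("inf")  # self-hosting never catches up on marginal cost
    return fixed_monthly / (price_per_s - marginal)


price_per_s = 0.10      # hypothetical API price per generated second
gpu_hourly = 2.00       # hypothetical GPU rental cost per hour
gen_ratio = 60.0        # hypothetical: 60 GPU-seconds per output second
fixed_monthly = 3000.0  # hypothetical ops/engineering overhead per month

be = break_even_video_s(price_per_s, gpu_hourly, gen_ratio, fixed_monthly)
print(f"break-even: {be:,.0f} output seconds per month")
```

With these placeholder numbers, self-hosting breaks even at about 45,000 output seconds (12.5 hours of video) per month; below that volume, per-second API pricing is cheaper.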

(Conditional scenario) Where IP controls permit, hybrid workflows (closed models for fidelity, open models for customization) are likely to emerge as the dominant strategy.
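
A hybrid workflow needs a routing rule. The sketch encodes the heuristic above as a small policy: closed APIs for fidelity, open weights for customization, and only where IP controls allow assets to leave the premises. The request fields, backend labels, and decision order are hypothetical. Resolving a fidelity-versus-customization conflict toward open weights is one choice among several, and a real policy might escalate to a human instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    needs_high_fidelity: bool
    needs_custom_weights: bool       # e.g., fine-tuning on a house style
    assets_may_leave_premises: bool  # IP / data-residency constraint


def route(req: Request) -> str:
    """Pick a backend for one generation request (hypothetical policy)."""
    if not req.assets_may_leave_premises:
        return "open-self-hosted"  # IP controls rule out cloud APIs
    if req.needs_custom_weights:
        return "open-self-hosted"  # only open weights can be fine-tuned; wins over fidelity here
    if req.needs_high_fidelity:
        return "closed-api"
    return "open-self-hosted"      # assumption: default to in-house when nothing requires the API


print(route(Request(needs_high_fidelity=True, needs_custom_weights=False, assets_may_leave_premises=True)))  # closed-api
```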

Framework: Low-Risk vs. High-Risk Deployments

- Low-risk (likely to help): internal training videos and prototype marketing assets, produced with closed models behind human review gates, where consistency matters more than customization.

- High-risk (likely to backfire): customer-facing content, regulated sectors (e.g., finance, healthcare), open-weight fine-tuning without audits, and settings whose incentives reward speed over accuracy.
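
The low-risk/high-risk screen can be expressed as a checklist in which any high-risk condition dominates. In this sketch the field names and the "needs-review-gate" outcome are hypothetical simplifications: the framework lists human review gates as part of the low-risk profile, so a deployment without one is not classified as low risk here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    customer_facing: bool
    regulated_sector: bool             # e.g., finance, healthcare
    unaudited_open_finetune: bool
    rewards_speed_over_accuracy: bool
    human_review_gate: bool


def risk_tier(d: Deployment) -> str:
    """Any high-risk condition dominates; low risk also requires a review gate."""
    if (d.customer_facing or d.regulated_sector
            or d.unaudited_open_finetune or d.rewards_speed_over_accuracy):
        return "high"
    return "low" if d.human_review_gate else "needs-review-gate"


internal_training = Deployment(False, False, False, False, True)
print(risk_tier(internal_training))  # low
```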

The common advice to "start small with open-source" often fails in practice: organizational inertia and distorted incentives lead teams to over-rely on automation, which can amplify biases inherited from training data.

What Actually Works (and When It Doesn’t)

Non-obvious tactics:

- Prompt versioning as a governance artifact: Log prompts as auditable records (context: traceability in regulated use; risk: overlooked misuse; failure mode: unlogged iterations evade review).

- Prompt versioning helps when compliance dominates but backfires when creativity dominates, because the logging overhead stifles iteration.

- Hybrid human-AI review loops: generate drafts with closed models and refine them through human edits (context: bridges the gap between lab and real-world performance; risk: over-editing can erase value; failure mode: human edits introduce inconsistencies the model had avoided).

- When not to deploy: high-volume personalization without oversight, which risks eroding audience trust.

- Reference-frame anchoring: Use enterprise-owned images/videos as inputs for consistency (context: mitigates hallucinations; risk: IP leakage in cloud APIs; failure mode: poor anchors amplify biases).

- Human intervention destroys value when it overrides physically accurate outputs purely for "style."
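
Prompt versioning as a governance artifact can be sketched as an append-only log in which each record commits to the previous record's SHA-256 hash. Editing, deleting, or reordering an earlier record then breaks verification. The record fields, the genesis value, and the model name `closed-model-x` are hypothetical, and this is one possible design rather than a standard. A bare chain also cannot detect truncation of the newest records unless the latest hash is stored somewhere else.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(record: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the record (without its own hash)."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class PromptLog:
    """Append-only prompt history; each record commits to the one before it."""

    def __init__(self) -> None:
        self.records: list = []

    def append(self, author: str, model: str, prompt: str) -> dict:
        record = {
            "version": len(self.records) + 1,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "author": author,
            "model": model,
            "prompt": prompt,
            "prev_hash": self.records[-1]["hash"] if self.records else GENESIS,
        }
        record["hash"] = _digest(record)  # computed before the "hash" key exists
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; edits, deletions, or reordering of earlier records fail."""
        prev_hash = GENESIS
        for record in self.records:
            unsigned = {k: v for k, v in record.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or _digest(unsigned) != record["hash"]:
                return False
            prev_hash = record["hash"]
        return True


log = PromptLog()
log.append("analyst", "closed-model-x", "30-second onboarding clip, brand palette")
log.append("analyst", "closed-model-x", "same clip, slower pacing")
print(log.verify())  # True
log.records[0]["prompt"] = "edited after review"
print(log.verify())  # False
```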

Avoid using this technology for decision-critical content, such as court evidence or training materials. Deepfake risks have been measured in benchmarks, but their full effects remain unknown.

Image integration (3/3):

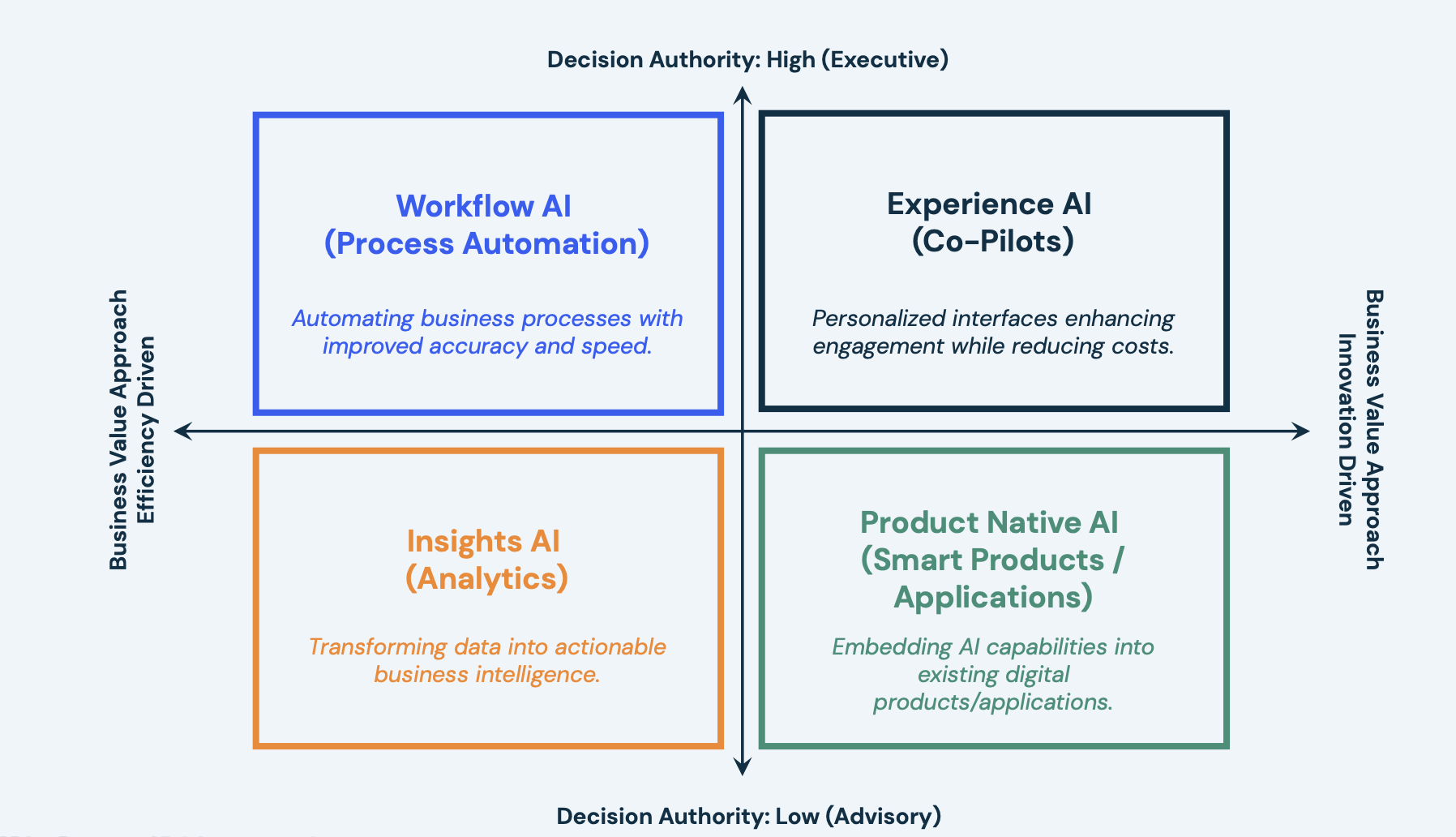

The Four-Quadrant AI Framework: Your Strategic Guide to Enterprise …

This enterprise AI implementation matrix distinguishes strategic quadrants (e.g., high-impact/high-risk). Applied to video generation, it separates what can be measured, such as cost and quality, from what cannot easily be measured, such as rules and …

Outlook: Conditional Scenarios for 2026

These are not predictions but tempered trajectories, each assigned a 30–50% likelihood by expert consensus in the reports reviewed.

- Acceleration scenario (if compute efficiencies scale): open frameworks such as TurboDiffusion enable real-time generation, and native audio and longer clips become widespread; constraints include regulation (e.g., deepfake disclosure mandates) and trust erosion.

- Plateau scenario (if data or regulation becomes binding): fidelity gains are incremental, and open-source releases mainly strengthen hybrid use; constraints include organizational inertia and quality limits.

- Constrained scenario (if risks materialize): deployments are restricted in regulated sectors; upper bound: minute-long clips under ideal conditions.
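
The three likelihood ranges can be sanity-checked with simple arithmetic. The sketch assumes the scenarios are mutually exclusive and exhaustive, which the underlying reports may not intend. Under that assumption, any set of point estimates must sum to 1. Using range midpoints as point estimates is also an assumption.

```python
# Scenario likelihood ranges from the outlook, treated here as mutually
# exclusive and exhaustive (an assumption; the reports may not intend this).
scenarios = {
    "acceleration": (0.30, 0.50),
    "plateau": (0.30, 0.50),
    "constrained": (0.30, 0.50),
}


def ranges_feasible(ranges) -> bool:
    """Exclusive, exhaustive scenarios need some choice within the ranges summing to 1."""
    low = sum(lo for lo, _ in ranges)
    high = sum(hi for _, hi in ranges)
    return low <= 1.0 <= high


def normalized_midpoints(ranges) -> list:
    """Use range midpoints as point estimates, rescaled so they sum to 1."""
    mids = [(lo + hi) / 2 for lo, hi in ranges]
    total = sum(mids)
    return [m / total for m in mids]


ranges = list(scenarios.values())
print(ranges_feasible(ranges))
for name, weight in zip(scenarios, normalized_midpoints(ranges)):
    print(f"{name}: {weight:.2f}")
```

Three overlapping 30–50% ranges are jointly consistent (for example, 30%, 30%, and 40%), and equal ranges imply roughly one-third weight on each scenario when planning.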

Compressed Synthesis

In late 2025, AI video generation offers enterprises measurable fidelity gains through closed models, but those gains are traded off against governance, cost, and integration frictions. Hybrid strategies can mitigate these risks to the extent that partial observability allows, but deployment success hinges on disciplined human oversight rather than on technological maturity alone.

Technologies rarely fail because they lack realism; they fail because organizations overestimate controllability under uncertainty.

Embedded video (end of post):

In this December 2025 discussion from the Supermicro Open Storage Summit, Keith Pijanowski (MinIO) and Gary Brown (Intel), experts in high-performance storage and infrastructure, analyze infrastructure challenges for enterprise generative AI, including scalability for video workloads. Watch it with operational constraints in mind (e.g., latency vs. quality trade-offs), as well as the real-world deployment barriers the speakers discuss.


Rigor is not what you add at the end; it is what constrains every sentence before it exists.